News From the Future #1

The Experience Machine

Republished from the November 2029 issue of Wired, with permission.1

Eliud looked a little bit dizzy. He confessed as much before we started our third virtual interview — I was in San Francisco, and he was in Nairobi. “The new work is more tiring,” he explained. “You stop trusting your eyes.”

In the last ten years, venture capital has steadily transformed Kenya into a so-called “Silicon Savannah,” spurred by the country’s vast supply of unemployed but well-educated workers. Controversially, many of the interloping firms — in particular, OpenAI — have hired Kenyans en masse to serve as ‘humans in the loop’ for AI training.

Their job is to label the contents of visual, textual, auditory and other forms of data to help neural networks extract the relevant patterns. An employee might look at several hundred pictures of food, for instance, and note all the pictures that display soup.

The work is low-paying and exhausting. For struggling workers, however — and for companies that want to develop state-of-the-art AI — there is no alternative.

But recently, centers like the one that employs Eliud have been retrofitted for a new kind of training. Eliud still sits down at his computer every day. But the text files are gone. Instead, he puts a net of electrodes on his head. For the next ten hours, the electrodes stimulate his brain for periods ranging from three to thirty seconds. When they stop, he tells the computer what he experienced.

Eliud was disturbed by this development. He acquired my email address from a friend. I had interviewed her previously for a story about the Kenyan government’s use of machine vision algorithms to identify and arrest youth activists.

“Can you tell me what happened today?” I asked.

“Redness,” he said. “Just a wall of color. Then a very painful pinch in my shoulder.” He rubbed his neck. “Some music, later. But simple music, not good. One note going on and on and on. Oh, and I smelled trees during the music. Usually it’s only one thing. But sometimes there are more.”

OpenAI, it seems, is trying to build an experience machine.

In 1974, Robert Nozick imagined a machine “that would give you any experience that you desire.” His vision was hypothetical; he used it to demonstrate the pitfalls of hedonism. But in principle, it is no longer hard to design an experience machine.

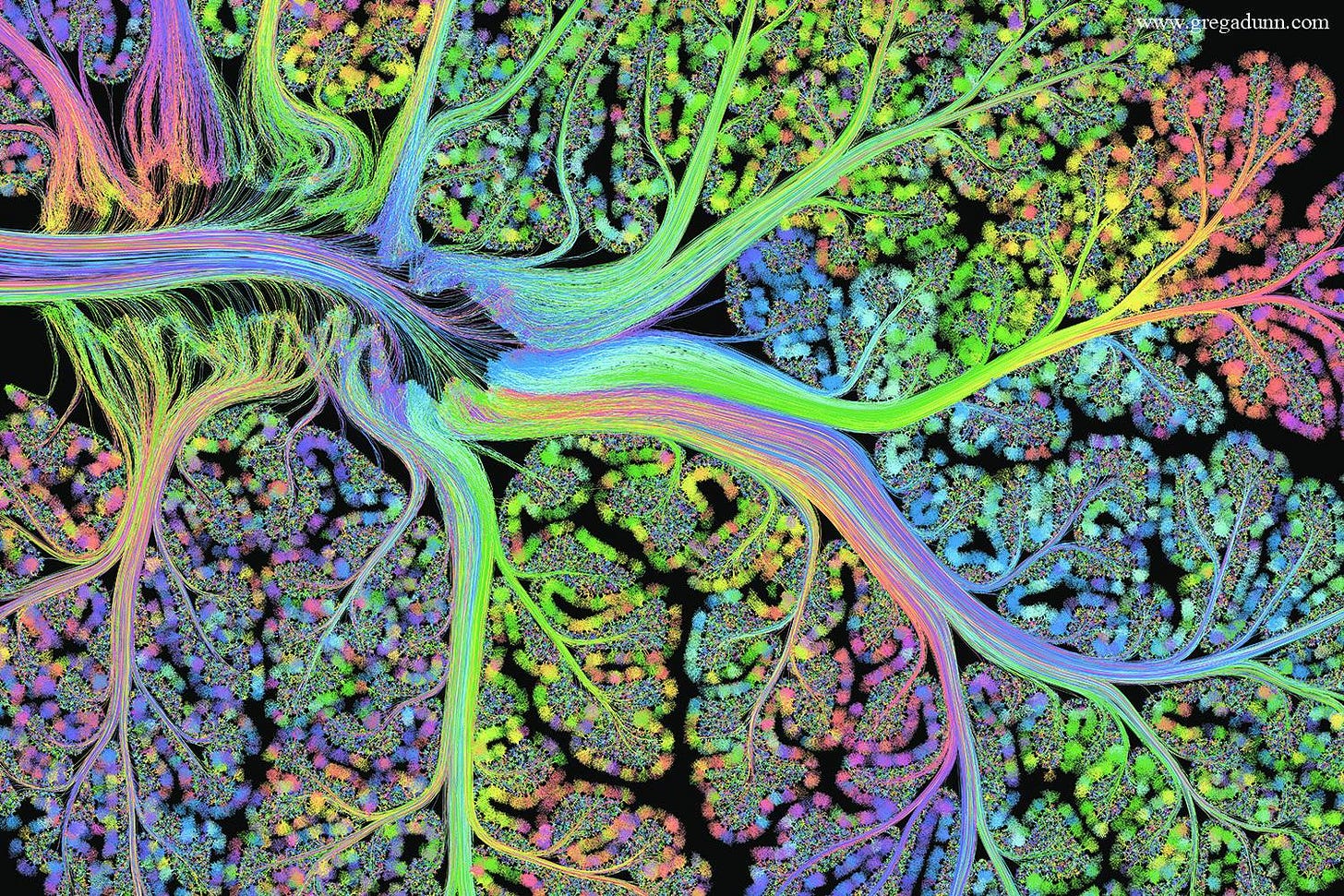

First, recall that we can induce different conscious experiences by stimulating the brain with electrical currents. Wilder Penfield, a legendary neurosurgeon, is usually credited with this discovery. Seventy years ago, he placed electrodes directly onto the temporal cortex of an epileptic patient. She was fourteen years old; history knows her as J.V. When Penfield stimulated one location, J.V. said: “I imagine I hear a lot of people shouting at me.” In another: “I saw someone coming toward me, as though he was going to hit me.”

So a functional experience machine would work by tracking which patterns of electrical stimulation produce particular experiences in the brain. If a machine possessed that map, and a tool that could safely apply it, a user would be able to trigger experiences on demand.

In practice, though, this blueprint faces two difficulties. One is mechanical: the machine would need detailed control over the brain to cover the vast variety of possible experience. But this is arguably just a matter of building better and more precise tools: a hard problem, but not an impossible one.

The (seemingly) impossible problem is scientific: how, exactly, are we supposed to precisely map the varieties of experience onto different parts of the brain? To figure out the sounds made by strings on an instrument you’ve never played before, you can just hit the strings and listen. But what if the instrument has 100 billion strings?

During the winter of 2027, Dmitriev Levin started sleeping in his office. I met him on the Charles Esplanade a few months later, when the Boston cherry blossoms had just begun to bloom. His gait was short and careful. He stared at the ground when he spoke.

“A bad divorce,” he explained. “I brought an old futon out of storage.”

Levin is a renowned neuroscientist; his lab has been responsible for many of our most fundamental insights into the mechanisms that underlie consciousness. Around the time he brought out his futon, OpenAI had successfully established new data centers in Texas, Mexico, and Ohio as part of their ‘Stargate’ project. Simultaneously, the wave of interest in artificial intelligence had finally begun to seep into his field, not only philosophically but methodologically.

“I never cared if it was conscious,” Levin told me. “Too speculative for debate, yes? But I am always looking for new empirical techniques. That seemed more interesting.”

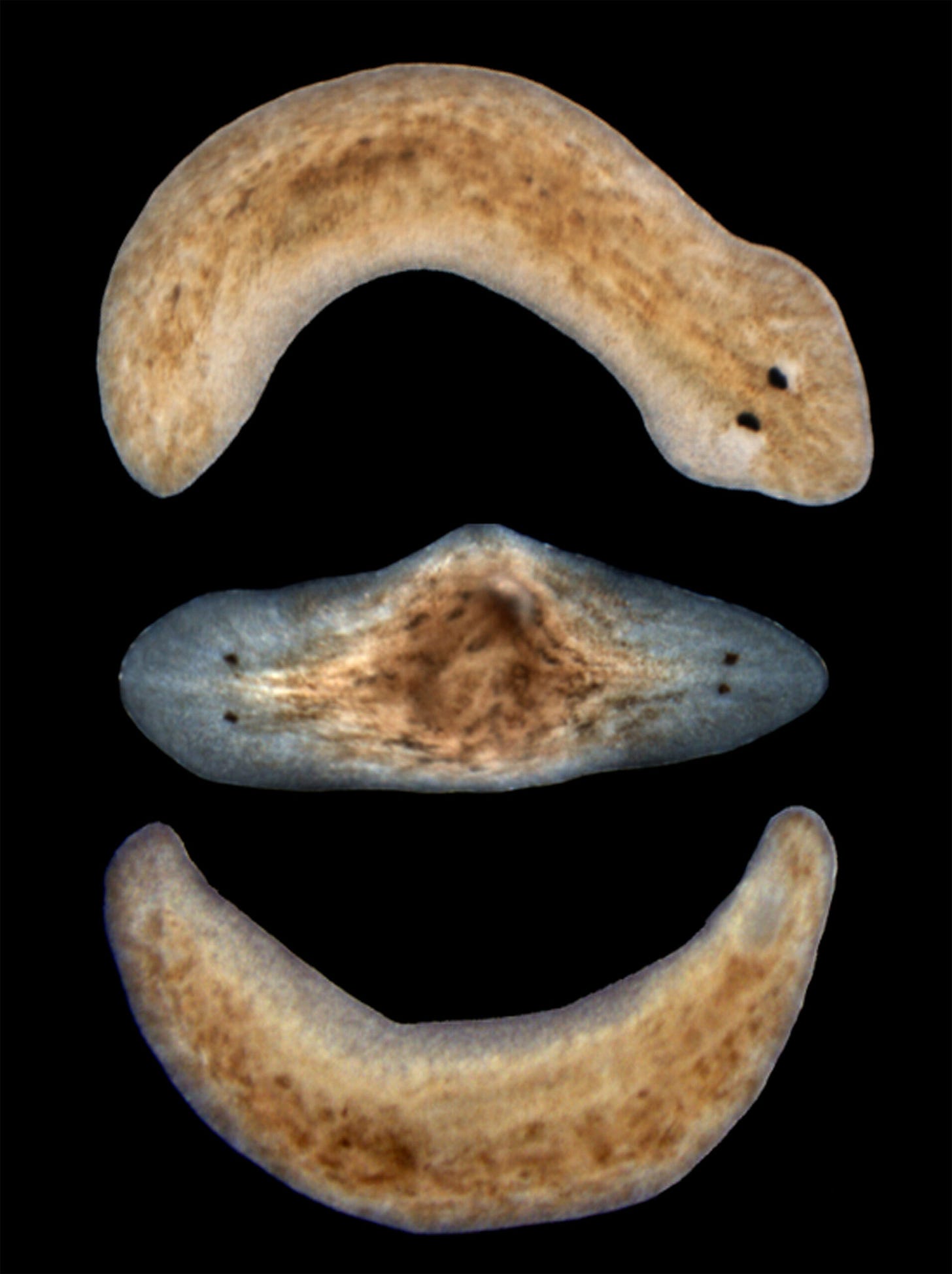

Levin was especially interested in a new approach from cell biology: prompt-to-intervention (P2I) models. Several prominent biologists had been arguing for years that one could command a group of cells without guiding the movements of every individual cell. They analogized the idea to the power of a programming language; if you can use Python, you don’t need to write directly in the much more confusing machine code that underlies it. Ideally, we could tell the cells in an amputee’s stump to make a new arm using high-level instructions, and they would figure out how to do it themselves at the low level.

Michael Levin, a distant cousin of Dmitriev, had referred to this hypothetical bio-Python as the “anatomical compiler.” Dmitriev said he and Michael had never spoken; nonetheless, he found the idea inspiring. And in August of 2025, Michael and his colleagues released a paper that would inadvertently lead Dmitriev to a blueprint for the experience machine.

The paper detailed a new technique for cellular control. Its prompt-to-intervention models allowed users to direct a cell culture with natural language commands, like “cluster” or “scatter.” A neural network would then try to translate the command into low-level instructions for a simulated cell culture.

Over many attempts, the network would learn how to accurately map commands onto behavioral outputs for the culture. Consequently, the final model could take a command like “make the cells form a tight cluster in the center”; with no further intervention from a user, the model would translate that into cellular movement. Hence the name: a prompt leads to an intervention, with a neural network playing the role of middleman.

The paper didn’t get very much attention. But Dmitriev, leaning against the lightless windows of his office, read it several times over. His cousin could use artificial intelligence to tell groups of cells how to behave, even if he didn’t know how his commands were translated by the network into practice. Why couldn’t Dmitriev do the same thing with groups of neurons?

Good artists borrow; great artists steal. “I am shameless,” Dmitriev admitted. “But Mike didn’t mind. He emailed me to say he liked the paper.”

In an article published a couple of months later, Dmitriev proposed a prompt-to-intervention model for conscious experience. He suggested that a neural network could be trained on data about the subjective effects of stimulating the brain in different ways. Take a subject, electrify their brain, and ask them to describe what they felt. Then try a new stimulus pattern, and ask them to describe the experience again. And again, and again, and again.

Eventually, if the neural network is paired with a sufficiently advanced controller, it should be able to convert natural language into patterns of neural stimulation. You could tell it “Show me a pink tree,” and using an internal map between natural language and patterns of electrical stimulus, it would galvanize the brain to do exactly that.

Of course, neither the user nor the designer would ever get to see the map. Dmitriev’s proposal, brought to life, would bring his field no closer to understanding the neural correlates of consciousness. But that didn’t restrict the potential for such a machine to provide detailed control over conscious experience. Programmers don’t write in machine code; pianists don’t play the cochlea; to use the experience machine, you wouldn’t need to know how it works.

I asked Dmitriev if he had thought about whether someone might actually implement his idea. He considered it, briefly, then shrugged. “No,” he said. “Not really. It just seemed true. So I did it.”

So he did. There was little response from the press; the concept was too speculative to make many waves. By the time Eliud contacted me with his story, Dmitriev had moved back into his apartment.

When Eliud first tried the experience machine, he thought he had gone insane.

“It looked like a kofia,” he said. “But made of a very fine material. Like chainmail from Lord of the Rings. The supervisor wouldn’t answer my questions. He just puts it on my head and leaves. Then I open the computer, see the console, the net gets warm, and I hear a little ‘pop’ in my ear, over and over and over. And I think, oh no, tar chera. I’m done.”

“Tar chera?”

“Crazy. Wires ripped in the head.”

Nozick described the experience machine to make a political point. Developing a version of the machine that would actually fit his vision, though, requires lots of trial and error. The data-heavy demands of neural networks, and the complexity of the problem they’re attempting to solve, inflate that requirement exponentially.

To build an experience machine, OpenAI will need thousands of people like Eliud: workers who, by dint of the womb lottery, have few alternatives to any wage on offer, even if the price is their sanity.

“Sometimes I get very confused,” Eliud said. “I came home from work, yesterday, and I remembered a necklace my grandmother had. It was a pendant, a very beautiful blue pendant. And I remembered the kind of blue it was, then realized it was not really that kind of blue.”

Eliud tapped his temple with his index finger. “I was picturing a color the machine had shown me. The things get in your memories, I think. They get in your dreams.”

For now, the experience machine mostly generates “atomic qualia,” to borrow a phrase from Levin. In the philosophy of mind, qualia are the raw feelings of consciousness: the soursweet tang of a strawberry, the sound of a chord, the particular redness of a sunset. By extension, atomic qualia are the most basic features of experience — the atoms of subjective life. The taste of a strawberry might comprise the atomic qualia of sweetness, sourness, and so forth.

Not everyone agrees that there are such things as atomic qualia. But as a shorthand, the term usefully describes the kinds of experiences that Eliud and his colleagues have reported. Fundamental forms of consciousness, colors and sounds and smells; and occasionally, a more complex mixture, a molecular quale.

Almost certainly, though, the end goal for OpenAI is a machine that can do exactly what Nozick described: generate any conceivable experience using the contours of natural language. We can tell a neural network to reorganize a colony of cells, write computer code, and create paintings. If the project achieves its aim, we will also be able to tell one to change our minds.

It is hard to say whether this aim is within reach, or whether it is possible at all. Dmitriev suspects that it is closer than one might think. “All you need is data and power,” he said. “For solving these kinds of problems, it is that simple.”

“Will it tell us anything about consciousness?” I asked.

“Ha. That will be much more complicated. But I think most consumers can do without the understanding. They’ll be happy enough with their porn.”

Dmitriev, like Nozick, is pessimistic about the effects of the experience machine. He worries that it will accelerate us further into an age of lonely hedonism. This is a common view among intellectuals — barring a few notable exceptions, the post-Nozick tradition has preferred to argue against the worth of false experience.

Eliud wasn’t so sure. When I asked him whether he thought an experience machine would be good or bad for the world, he shrugged. “I don’t know. Maybe it will distract us from real problems. But we already have to distract ourselves so much, all the time. Because life is so hard, you see? Shouldn’t people get to be happy?”

“Has the machine made you happy yet?”

Eliud looked away from the camera for a moment. “Mm. A few times, yes.”

“Can you tell me about one of those times?”

“Well. There was something else I saw today. I did not say it, because it sounds so strange. But I saw a woman’s face.”

“I see,” I replied. “Did you recognize her?”

“No, no. She could not be from here.”

“And seeing her made you happy?”

Eliud smiled. “Yes. She had the most beautiful eyes I have ever seen.”

Thanks to Noah Stark, Somayya Upal, and Ethan Wellerstein for reviewing an earlier draft of this piece.